Operations and maintenance have a tendency to be forgotten by ambitious developers who want to deliver quickly on user expectations. This is why your data-driven readiness initiative should also plan for how you will operate and maintain the developed data products/use cases over time.

If you include this early in the process, it becomes a lot easier when it is time to put things into production and continuous evolution. This is especially true as it might involve other divisions, departments or functions within your organisation. These might need to allocate resources, and you may need some time to convince them of the greatness of this specific initiative compared with their other assignments.

DataOps and MLOps

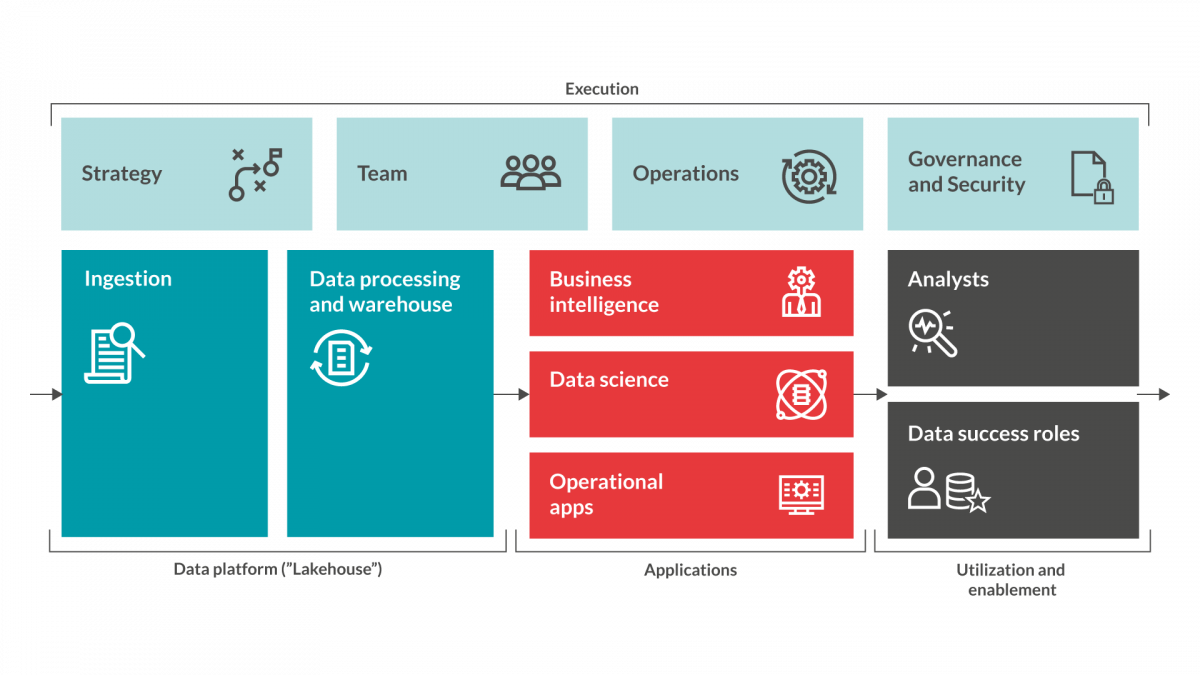

Popular terms when talking about operations in relation to implementations of data-driven initiatives are “DataOps” (short for Data Operations) and “MLOps” (short for Machine Learning Operations). The idea is to have methodologies, routines and tools to manage the specific processes related to running data models or machine learning models, as well as the ingestion of new data into these models.

Put simply, the routines related to running “DataOps” or “MLOps” are very close to the routines normally implemented for running other applications within your organisation. What may differ is that other roles and persons are involved in the management and maintenance of these environments. Rather than the usual developers, operations or “DevOps” staff, you might have Data Engineers or Data Scientists who want to update the scripts, software or data used to run analysis in the environments.

Also, in the initial phases of an initiative, or if you are a smaller organisation, it is often the same people who do the actual development who also establish and maintain routines for updates, new versions, adding new products, adding data and so forth. This is especially true for data teams; it is often even more difficult to split a data team between development and maintenance than it is for a tech team.

Establishing routines and maintenance

No matter if you are a large or small organisation, it is important that you establish routines for operating and maintaining your system environment as early as possible in the process. You should also make sure to include routines for how to update both software and data models. Looking into a DevOps style of work for this is a good idea as there are often already methods and routines in place within the organisation.

A DevOps style of work is also designed to cater for many quick changes in a system environment without affecting its stability, similar to what you can expect in a “DataOps” or “MLOps” environment. A good idea can be to ask your Data Engineers and/or Data Scientists to sit down with the existing DevOps team in your organisation to share experience and sync procedures. If these routines do not exist, establishing them can also be driven by the data-driven readiness initiative, and if required there are external resources available to assist.

The strategy and architecture you decide on for your data products, models and use cases will also have a significant impact on the level of complexity and scope required in the DataOps aspects of the data initiative. For example, the more time critical a data product is, the more advanced monitoring it needs.

Likewise, more complex data models, or simply a larger number of them, will put additional requirements on DataOps routines.
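As a rough illustration of how time criticality translates into monitoring requirements, the sketch below checks whether a data product has been refreshed recently enough. The product names, the maximum ages and the `is_stale` helper are all hypothetical and only meant to show the idea: a more time-critical product gets a tighter limit.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical freshness requirements per data product (illustrative values only).
MAX_AGE = {
    "daily_sales_report": timedelta(hours=24),
    "fraud_scoring_features": timedelta(minutes=5),
}


def is_stale(product: str, last_updated: datetime, now: Optional[datetime] = None) -> bool:
    """Return True if the product's data is older than its allowed maximum age."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - last_updated > MAX_AGE[product]
```

With these example limits, data that is ten minutes old would be acceptable for the daily report but already stale for the fraud scoring features.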

Mission critical and available 24/7

Similar to what you expect from any mission critical system environment, you should also make sure to implement guidelines and routines for monitoring, alerts and error handling of your analytics environment. This will give you notifications before critical issues arise and a chance to attend to potential issues before they affect the stability of the environment.
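One common way to get notified before an issue becomes critical is to use two alert levels for the same metric. The following is a minimal sketch of that idea; the `Threshold` class, the `classify` function and the example metric with its limits are assumptions made for illustration, not part of any specific monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    warning: float   # level at which someone should take a look
    critical: float  # level at which stability is at risk


def classify(value: float, threshold: Threshold) -> str:
    """Map a metric value to an alert level: 'ok', 'warning' or 'critical'."""
    if value >= threshold.critical:
        return "critical"
    if value >= threshold.warning:
        return "warning"
    return "ok"


# Hypothetical metric: share of failed pipeline runs during the last hour.
failed_run_ratio = Threshold(warning=0.05, critical=0.20)
```

The warning level is what gives the team a chance to act early; the actual values would have to be agreed per data product.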

As your initiative evolves and delivers interesting insights for decision makers within your organisation, the importance of the environment and the value it delivers grow with it. Don’t be surprised if decision makers soon reckon that it is mission critical and expect it to be available 24/7. For this reason it is good to have the routines for continuous operations of the analytics environment in place right from the start.

Initially, the analytics environment can be managed by the same people who do the actual development, but over time it needs to be incorporated into corporate routines for operations and maintenance.

More information

Redpill Linpro is launching our Data driven ready model in a viral way by releasing a series of blog posts to introduce each step in the model. This is the fourth post in this series. Below you will find a “sneak peek” into the different steps of the model. Stay tuned in this forum for more information on how to assure Data driven readiness...