This isn’t about demonizing AI or cloud computing. They are transformative technologies that open doors we couldn’t even imagine a decade ago. But if we want to keep benefitting from them in the long run, we need to understand the trade-offs—and learn how to innovate responsibly.

The energy behind intelligence

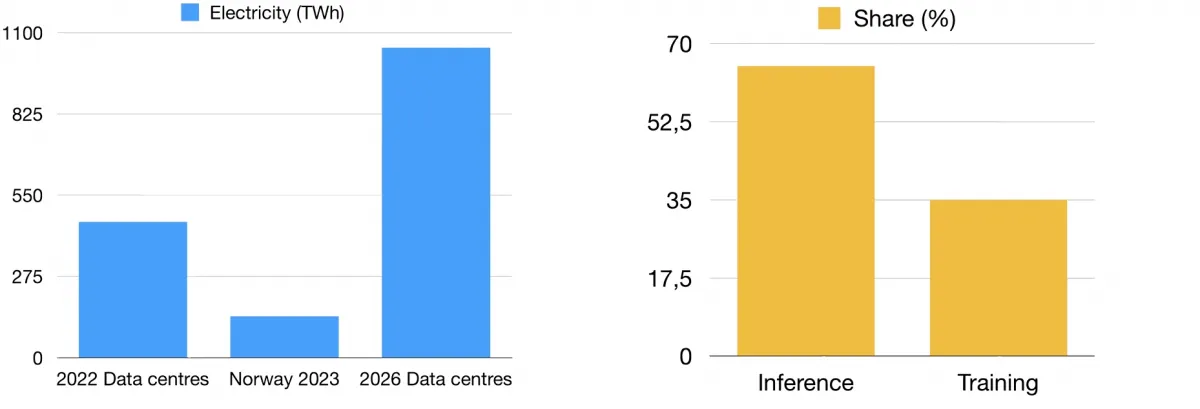

In 2022, the world’s data centers consumed about 460 terawatt-hours of electricity—roughly three times Norway’s annual power use. By 2026, that figure could pass 1,000 TWh. That’s not just an abstract statistic: it represents pressure on local grids, rising demand for renewable power, and difficult trade-offs between industries.

AI plays an increasingly large role here. Early estimates suggested a single ChatGPT query used ~3 Wh, about ten times more than a Google search. More recent data for today’s models point closer to ~0.3 Wh, in the same ballpark as a search query. But the real story isn’t about any one question. It’s about scale. Multiply that cost by billions of queries a day, and the numbers add up quickly.

Even more striking is the shift in where AI’s energy is used. A few years ago, most of the electricity went into training models. Today, inference (the act of answering our questions) accounts for as much as 60–70% of total consumption. In other words, it’s our daily use that drives the footprint.
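To make the scale argument concrete, here is a minimal back-of-the-envelope sketch. The per-query figures (0.3 Wh and 3 Wh) come from the estimates above; the daily query volume of one billion is purely an assumption for illustration, not a reported number.

```python
def annual_energy_twh(wh_per_query: float, queries_per_day: float) -> float:
    """Annual electricity in TWh for a per-query cost and a daily query volume."""
    wh_per_year = wh_per_query * queries_per_day * 365
    return wh_per_year / 1e12  # 1 TWh = 1e12 Wh


# Hypothetical volume: "billions of queries a day" is not quantified in the text.
ASSUMED_QUERIES_PER_DAY = 1e9

for wh in (0.3, 3.0):
    twh = annual_energy_twh(wh, ASSUMED_QUERIES_PER_DAY)
    print(f"{wh} Wh/query at 1e9 queries/day: about {twh:.3f} TWh/year")
```

Under that assumption the yearly total lands between roughly 0.1 and 1.1 TWh per billion daily queries, which is why the per-query estimate matters so much once volume grows.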

Water: the hidden ingredient

If energy is the obvious cost, water is the hidden one. At first glance, it seems odd—what does water have to do with AI? The answer lies in cooling.

Many large data centers rely on evaporative cooling, which consumes 1.8–2 liters of water per kWh of electricity. In cooler climates like the Nordics, closed-loop systems are more common, with far lower water loss. But in warmer, drier regions, the demand can be significant—and often, these are the very places where water is already scarce.

One study in 2023 suggested that 20–50 AI queries could consume half a liter of fresh water, a comparison that quickly caught the public’s imagination. Newer systems may be less thirsty, but the message stands: AI is not an abstract digital activity—it carries a real, tangible water footprint.
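The water figures can be put side by side with a short sketch. It assumes on-site water use scales linearly with electricity (litres per kWh) and reuses the per-query energy estimates quoted earlier; the function names are made up for this example.

```python
def water_ml_per_query(wh_per_query: float, litres_per_kwh: float) -> float:
    """On-site cooling water per query in millilitres, assuming linear scaling."""
    kwh = wh_per_query / 1000.0
    return kwh * litres_per_kwh * 1000.0  # litres -> millilitres


def implied_ml_per_query(total_litres: float, queries: int) -> float:
    """Per-query water implied by a 'N queries use X litres' style claim."""
    return total_litres * 1000.0 / queries


# Evaporative cooling at 1.8 to 2 L/kWh, older (3 Wh) and newer (0.3 Wh) estimates
for wh in (3.0, 0.3):
    low = water_ml_per_query(wh, 1.8)
    high = water_ml_per_query(wh, 2.0)
    print(f"{wh} Wh/query: {low:.2f} to {high:.2f} mL of cooling water")

# The 2023 study: half a litre per 20 to 50 queries
print(implied_ml_per_query(0.5, 50), "to", implied_ml_per_query(0.5, 20), "mL per query")
```

The study's implied 10 to 25 mL per query is higher than the cooling-only figures computed here, so the two are not directly comparable: they likely rest on different energy assumptions and on different boundaries for what water is counted.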

Cloud providers are responding. AWS reports a Water Usage Efficiency (WUE) of 0.15 L/kWh, and says it returned 4.3 billion liters of water to local communities in 2024. These are important steps, but they don’t include the water used upstream in electricity production. Sustainability reports, in other words, don’t always tell the full story.

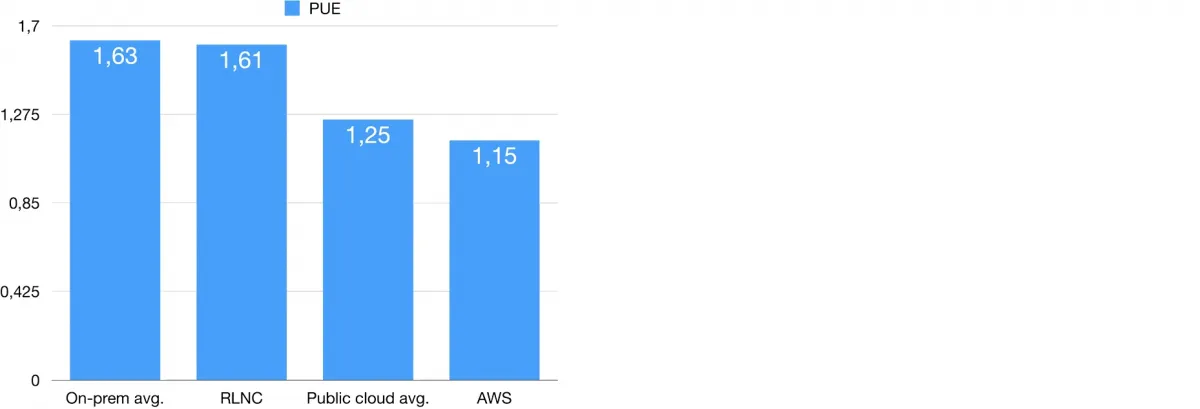

Efficiency gains, rising emissions

The hyperscalers (AWS, Microsoft, Google) are racing to improve efficiency. AWS, for example, claims a global PUE (Power Usage Effectiveness) of 1.15, better than most cloud peers and far ahead of traditional on-premise data centers. The company highlights advances such as Graviton processors (up to 60% less energy per task) and direct-to-chip liquid cooling, which it says reduces cooling energy by about 46%.
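PUE is the ratio of total facility energy to the energy that reaches the IT equipment, so a value of 1.15 means 0.15 kWh of overhead (cooling, power conversion and so on) for every kWh of compute. A small sketch of that relationship, with illustrative function names:

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy for a given IT load; PUE = total / IT energy."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def overhead_share(pue: float) -> float:
    """Fraction of total facility energy that does not reach IT equipment."""
    return (pue - 1.0) / pue


total = facility_energy_kwh(100.0, 1.15)
print(f"100 kWh of IT load draws about {total:.0f} kWh at PUE 1.15")
print(f"Overhead share of the total: {overhead_share(1.15):.1%}")
```

Note that PUE only describes overhead. It says nothing about how much work the IT equipment does per kWh, which is where processor efficiency comes in.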

These innovations matter. They show that it’s possible to push computing closer to sustainability. But here’s the paradox: despite these gains, absolute emissions keep climbing. Amazon’s reported carbon footprint rose from 64 to 68 million tons CO₂ between 2023 and 2024. Efficiency alone can’t outrun demand.

A Norwegian perspective

Norway has a natural advantage in this debate: 88% of its power comes from hydropower, with total production of around 140–156 TWh annually. But even here, tension is growing as data centers scale.

The TikTok facility in Hamar is a case in point. At full capacity (150 MW, or roughly 1.3 TWh a year if run continuously) it could consume nearly 1% of Norway’s annual electricity. For one facility, that is significant.
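The "nearly 1%" figure can be checked with simple arithmetic. This sketch assumes the facility runs at its full 150 MW around the clock (a load factor of 1.0, which is an upper bound) and compares the result with the 140–156 TWh production range quoted above.

```python
HOURS_PER_YEAR = 8760


def annual_twh(power_mw: float, load_factor: float = 1.0) -> float:
    """Annual energy in TWh for a constant draw; load_factor=1.0 is an assumption."""
    mwh = power_mw * HOURS_PER_YEAR * load_factor
    return mwh / 1e6  # 1 TWh = 1e6 MWh


def share_percent(facility_twh: float, national_twh: float) -> float:
    """Facility energy as a percentage of a national total."""
    return 100.0 * facility_twh / national_twh


hamar = annual_twh(150.0)
for national in (140.0, 156.0):
    pct = share_percent(hamar, national)
    print(f"{hamar:.3f} TWh is {pct:.2f}% of {national:.0f} TWh")
```

Depending on which end of the production range is used, the share comes out between roughly 0.8% and 0.9%, consistent with the claim.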

Yet perspective matters. Norsk Hydro, one of Norway’s industrial giants, uses around 13% of the nation’s power. AI and data centers are big players in energy demand, but they are far from the only ones.

What we can do now

The good news? We’re not powerless. Developers, organizations, and policymakers can all take steps today to reduce the footprint of AI and cloud workloads:

- Choose the right model. Use task-specific models where possible; reserve large language models for when they truly add value.

- Optimize and autoscale. Eliminate idle workloads, batch tasks, and make use of autoscaling to save both power and cost.

- Adopt efficient hardware. Energy-optimized processors can cut consumption by up to 60%.

- Run workloads wisely. Place jobs in regions with renewable grids, and schedule heavy tasks when renewable supply is abundant.

- Monitor water. Track water usage efficiency and reduce stress in water-scarce regions.
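The "run workloads wisely" step can be sketched as a simple selection problem: given a forecast of renewable share per region and hour, pick the greenest slot for a deferrable job. Everything below is hypothetical, including the region names, the forecast values and the function name; a real setup would pull this data from a grid operator or cloud provider.

```python
from typing import Dict, List, Tuple


def pick_greenest_slot(forecast: Dict[str, List[float]]) -> Tuple[str, int, float]:
    """Return (region, hour_index, renewable_share) with the highest share."""
    best = None
    for region, shares in forecast.items():
        for hour, share in enumerate(shares):
            if best is None or share > best[2]:
                best = (region, hour, share)
    if best is None:
        raise ValueError("forecast is empty")
    return best


# Made-up renewable shares (0.0 to 1.0) for the next four hours in two regions
HYPOTHETICAL_FORECAST = {
    "region-a": [0.62, 0.70, 0.91, 0.85],
    "region-b": [0.40, 0.45, 0.55, 0.50],
}

region, hour, share = pick_greenest_slot(HYPOTHETICAL_FORECAST)
print(f"Schedule the batch job in {region}, {hour} hours from now ({share:.0%} renewable)")
```

This ignores latency, data residency and cost, which in practice often constrain where and when a job can run.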

Looking ahead

Generative AI and the cloud are here to stay. They will continue to shape how we work, create, and innovate. But they are not weightless. Every prompt has a cost in electrons and liters.

The challenge isn’t to slow innovation—it’s to balance it. To celebrate progress while acknowledging limits. To combine the Nordic advantage in renewable energy with conscious engineering choices.

If we can do that, we may yet enjoy the best of both worlds: the promise of AI, and the responsibility of sustainability.

(This blog post was, of course, helped along by ChatGPT, at a cost of approximately 3 Wh and, assuming evaporative cooling was used, ~6 mL of water. That energy could instead have run a 10 W LED for ~18 minutes.)